Throughout human history there have been many sources of inspiration. From Mother Nature’s endless complexity to a famous muse, humans have looked to many different things to learn from and be influenced by.

However, none of them have ever really had any type of intelligence other than our own human intelligence. Even today, while we strive to build the fastest, most grandiose new inventions such as AI, the leading experts essentially turn to loosely copying or mimicking our own brains.

But doing so misses a crucial opportunity that we as an entire race have never had before: the chance to learn from another intelligent species.

Forcing AI To Comply

Currently, when we build AI, most of us feed it two pieces of information: a question and then its answer. Like teaching a child what a cat is, we show it a picture of a cat and say “cat”.

A human child only needs a few of these Q&A samples before it can make “cat” predictions extremely accurately and even generalise too. Show it a picture of a totally different species of cat and the child will still correctly identify it.

With our current AI technology, correctly identifying a “cat” still takes thousands or even millions of “questions and answers” to teach it properly. This is of course getting better, as technology tends to do, but at its core we’re still quite often forcing the AI to learn and do things the way we do them.
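To make the “question and answer” idea concrete, here’s a minimal sketch in Python of learning from labelled pairs. Everything in it is made up for illustration: the two features (ear pointiness and snout length), the numbers, and the `train` and `predict` helpers. It also uses a simple nearest-centroid rule rather than the deep neural networks a real image classifier would use.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the feature vectors seen for each label (one centroid per label)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Answer with the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical "questions" (ear pointiness, snout length) and their "answers".
LABELLED = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.9), "dog"),
    ((0.2, 0.8), "dog"),
]

model = train(LABELLED)
print(predict(model, (0.7, 0.1)))  # → cat
print(predict(model, (0.1, 0.7)))  # → dog
```

Swap the labels around and the model will happily learn the wrong thing. It only knows what the answers tell it.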

We force AI to mimic how we go about solving problems because, as an arrogant species, we assume it’s the only way. While there are a few examples of AI researchers allowing the machine to teach itself, it’s not how things are usually developed.

We’re essentially trying to shortcut our way to making the machine “smart” by saying: look, we already know how to solve this problem, so just memorise all the rules and notes we’ve come up with over the years and you’ll be an A+ student quick smart.

However, as you might already know, simply knowing the answer doesn’t make for a very solid “tree trunk” of knowledge. You don’t understand, you’re just memorising, and when it comes to a new, slightly different problem you’re screwed!

It also misses out on a far more important aspect: being able to make new, never-before-seen discoveries.

First Principles Or GTFO

In science, and physics specifically, there is the notion of building up or calculating something from “first principles”. A calculation or idea is said to be from first principles if it starts directly at the basic, well-established laws of physics and moves up from there. That is, you build up your case or knowledge on the grounds of established facts and basic principles. You don’t “assume” things or go off esoteric data you collected; you prove your point logically and with explicit facts, one step at a time.

Having full knowledge of how something works right from first principles implies that you understand everything from start to finish. This full understanding means that you can adapt to new scenarios and use those basic principles and rules to work out the answer to never-before-seen problems.

This ability to handle unseen problems that are similar to other known problems is called generalisation in AI. With a greater understanding of lower-level principles, like what a ball is, what gravity is, why it exists and the raw physics laws that govern them, AI is able to generalise more and more.

As such, when we teach AI we should really be teaching it from first principles, just like we should teach children. You don’t want your kid to just memorise a bunch of facts; you want them to understand the raw physics and meanings behind the world so they can use their own intelligence to generalise and even improve on those concepts.

We shouldn’t be showing AI the question and the answer and then seeing if it integrated that information well. We should be teaching it core knowledge about the world so it can generalise across broad topics. This is very hard to do, though, so I understand why most don’t.

Now to be fair, there are some AI researchers who are trying to do this. What this style of teaching AI aims to build is called Artificial General Intelligence (AGI), which is an AI that can generalise across multiple disciplines just as humans do. Currently most pursue Artificial Narrow Intelligence (ANI), which is an AI that can be superhuman at just one single task, like finding the best driving route or detecting cancer from a biopsy.

A New Species

With all this said, I think the biggest and as yet most untapped potential of AI lies in it teaching itself and then us. When we feed AI question and answer style data and it becomes superhuman at doing just one task (e.g. driving cars), this is of course very impressive.

Having a computer fully automate various tasks has revolutionised, and will continue to revolutionise and disrupt, every industry out there. However, when we change to having the AI teach itself from completely unlabelled and unorganised data, even more astonishing things happen. It starts to teach us!

Unlabelled data is basically just a fancy AI term for real-world data. It’s like handing an AI a Wikipedia page. You’re not putting the data in special tables or marking things as questions and answers that are right or wrong. You’re simply providing raw data as it is. It then has to figure out what’s right and wrong, useful or irrelevant, all on its own, just like you or I would.
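As a rough illustration of what “figuring it out on its own” can look like, here’s a small Python sketch that splits a list of numbers into two groups without ever being told what the groups are. It’s a stripped-down version of k-means clustering with two centres. The `two_groups` helper and the animal weights are invented for this example, and real unlabelled data like a Wikipedia page is of course far messier.

```python
def two_groups(values, rounds=10):
    """Split unlabelled numbers into two groups with a tiny k-means (k = 2)."""
    low, high = min(values), max(values)  # deterministic starting centres
    if low == high:
        return list(values), []  # nothing to separate
    for _ in range(rounds):
        near_low, near_high = [], []
        for value in values:
            # Put each value with whichever centre it sits closer to.
            if abs(value - low) <= abs(value - high):
                near_low.append(value)
            else:
                near_high.append(value)
        # Move each centre to the average of its group.
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return near_low, near_high


# Hypothetical animal weights in kg, with no labels attached.
WEIGHTS = [4.2, 30.5, 3.9, 28.0, 4.5, 33.0]

small, large = two_groups(WEIGHTS)
print(small)  # → [4.2, 3.9, 4.5]
print(large)  # → [30.5, 28.0, 33.0]
```

Nobody told the code which animals were “small” or “large”. It found the split from the raw numbers alone.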

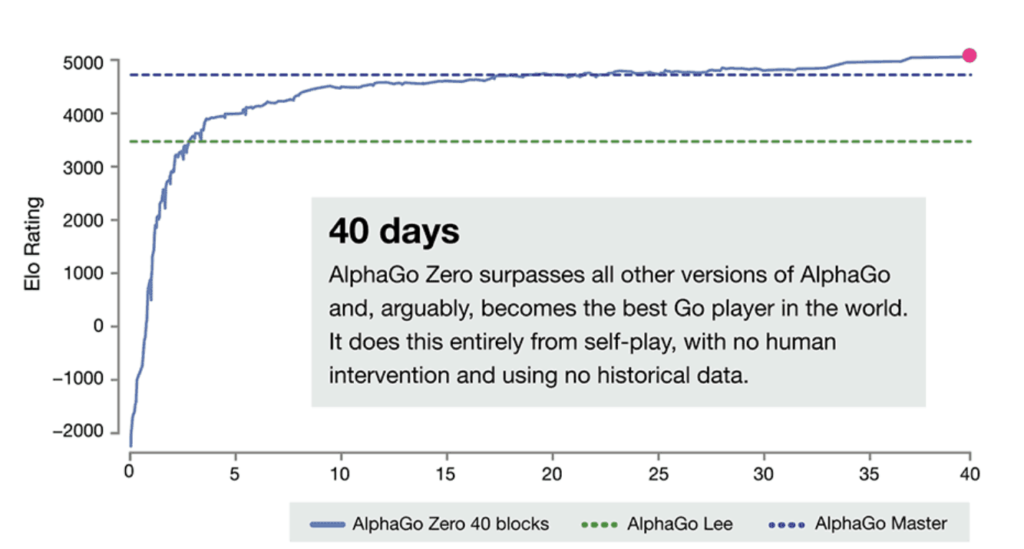

Obviously this makes teaching and programming the AI far harder, but the results can be well worth it. The most public example of this is Google DeepMind’s work on AlphaGo Zero. While this AI was created, just like many others, to play the Chinese game Go, the thing that made this one different is that it was given only the rules of the game and then taught entirely through self-play, with no human game data to learn from.

This is an intelligence that is entirely new and separate to the human species, something we’ve never had the ability to study and learn from until now. Yes, it’s only for the narrow, specific case of the game of Go, but it still learnt and became a master at it without any human strategy or influence.

Consider if an alien came to Earth in a UFO. Green, tentacles everywhere and clearly not human, but then said “hey, we’ve got that Go game on our planet too, let’s play!”. You would have human and alien playing each other and teaching each other the ways of winning that they’ve developed separately from each other. This is exactly what AlphaGo Zero is!
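To give a feel for self-play, here’s a toy Python sketch for a much simpler game than Go: a pile of stones where each player takes 1, 2 or 3 stones and whoever takes the last stone wins. The program is told only the rules, plays both sides against itself, and records how each move turned out. This is not how AlphaGo Zero works internally (it combines a deep neural network with tree search). The game, the `self_play` and `greedy_move` helpers, and the choice to practise on small piles before big ones are all assumptions made to keep the example short and reproducible.

```python
from collections import defaultdict

MAX_TAKE = 3  # each turn a player removes 1, 2 or 3 stones; taking the last stone wins


def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)


def greedy_move(q, stones):
    """Pick the move with the highest learned value (ties go to the smaller move)."""
    return max(legal_moves(stones), key=lambda take: q[(stones, take)])


def self_play(max_stones=12):
    q = defaultdict(float)     # learned value of (stones, take) for the player moving
    visits = defaultdict(int)  # how often each (stones, take) has been played
    for start in range(1, max_stones + 1):
        for first_take in legal_moves(start):
            # Force the first move so every option gets tried, then let the
            # program play both sides greedily using what it has learned so far.
            history, stones, take = [], start, first_take
            while True:
                history.append((stones, take))
                stones -= take
                if stones == 0:
                    break
                take = greedy_move(q, stones)
            # Whoever moved last won. Walk backwards through the game, flipping
            # the reward at each step because the players alternate.
            reward = 1.0
            for state_action in reversed(history):
                visits[state_action] += 1
                q[state_action] += (reward - q[state_action]) / visits[state_action]
                reward = -reward
    return q


q = self_play(12)
print([greedy_move(q, n) for n in (5, 6, 7)])  # → [1, 2, 3]
```

Without being told, the program ends up leaving its opponent a pile of 4 whenever it can, which is the known winning trick for this game. That’s a tiny version of a machine finding a strategy by itself that we can then read back and learn from.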

Now imagine if there was an AI that was able to teach itself physics without any human interaction or information. Imagine if it got better at physics than any human out there and discovered new subatomic particles or developed basic laws of the universe. It could “self-play” for 100 days on a few supercomputers and potentially come up with the grand unifying theory that combines the quantum world and gravity all in one equation.

Or maybe it figures out how to get around the seemingly hard-coded speed limit of the universe, the speed of light. The types of discoveries that AI could potentially one day make would utterly dwarf the cheap tricks it does now and teach us astonishing things. We wouldn’t even have to develop AGI specifically to get these benefits.

We Need To Learn From AI Now

Never before has the human race had another intelligent species able to teach us something. Never before has any other entity been able to generate new ways of solving complex problems that we haven’t come up with ourselves. About the closest thing to this is us copying what Mother Nature does in various things, but this isn’t really an intelligence, it’s just evolution.

As excited as I am about AI and its future, from self-driving cars to new drug discoveries or even having it control robots, they all pale in comparison to AI teaching us humans the new knowledge that it finds.

If you are programming AI out there or know someone who does, take a minute to really consider if building another AI to recommend content is the best use of your time. Instead, make the AI teach itself from first principles and with no human interaction. Feed it real-world data rather than labelled data and concentrate on making it superhuman, so that afterwards you can see what it’s doing differently to us humans. Those differences will be the new, never-before-discovered pockets of gold.

They’ll also be a great chance to get a completely fresh perspective on solving that specific problem, kind of like an alien coming down from above and sharing with us the ways they’ve developed over the aeons.
